Test Suites

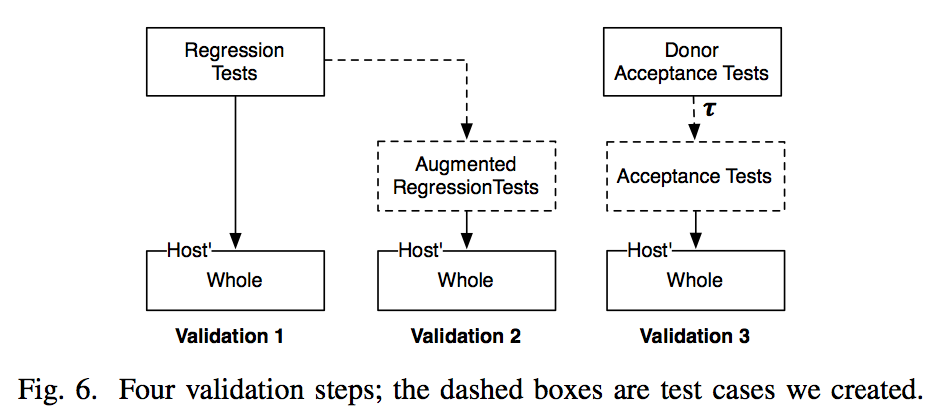

We use three different test suites to evaluate the degree to which a transplantation was successful, as shown in the figure: 1) the host’s pre-existing regression test suite, 2) a manually augmented version of the host’s regression test suite (regression++), and 3) the transplant beneficiary’s acceptance test suite, manually updated to test the transplanted functionality at the system level.

Below you can download the ice box, acceptance, regression, and augmented regression tests we used in the experiments reported in our technical report. We created the ice box, acceptance, and augmented regression test suites manually.

Ice Box Test Suite: To create the ice box test suites, we used the original donor programs to obtain test oracles. We then used the Check framework to write the ice box test suites, downloadable below. For example, in the case of TuxCrypt we first encrypted a file with the original program. In the Check unit tests, we then compared the file produced by executing the organ against the file produced by the donor, using the same encryption key.
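The donor-as-oracle comparison described above can be sketched in plain C. This is only an illustration under stated assumptions: `donor_encrypt` and `organ_encrypt` are hypothetical stand-ins (a toy XOR cipher, not TuxCrypt's code), buffers replace files, and ordinary comparisons stand in for the Check assertions used in the real suites.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical stand-in for the donor's encryption routine (the oracle).
 * A toy XOR cipher, NOT the algorithm used by TuxCrypt. */
static void donor_encrypt(const unsigned char *in, size_t len,
                          const unsigned char *key, size_t key_len,
                          unsigned char *out)
{
    for (size_t i = 0; i < len; i++)
        out[i] = in[i] ^ key[i % key_len];
}

/* Hypothetical stand-in for the transplanted organ running in the host. */
static void organ_encrypt(const unsigned char *in, size_t len,
                          const unsigned char *key, size_t key_len,
                          unsigned char *out)
{
    for (size_t i = 0; i < len; i++)
        out[i] = in[i] ^ key[i % key_len];
}

/* Returns 1 when the organ reproduces the donor's output for the same
 * input and the same key, 0 otherwise. */
int organ_matches_donor(const char *plaintext, const char *key)
{
    unsigned char expected[256];
    unsigned char actual[256];
    size_t len = strlen(plaintext);
    size_t key_len = strlen(key);

    if (len > sizeof expected || key_len == 0)
        return 0;

    /* Step 1: obtain the oracle from the donor. */
    donor_encrypt((const unsigned char *)plaintext, len,
                  (const unsigned char *)key, key_len, expected);
    /* Step 2: run the organ with the same key. */
    organ_encrypt((const unsigned char *)plaintext, len,
                  (const unsigned char *)key, key_len, actual);
    /* Step 3: compare the two results byte by byte. */
    return memcmp(expected, actual, len) == 0;
}

/* Runs a few ice box style cases and returns the number of failures. */
int run_ice_box_tests(void)
{
    int failures = 0;
    failures += !organ_matches_donor("hello, world", "secret");
    failures += !organ_matches_donor("", "k");
    failures += !organ_matches_donor("a longer message to encrypt", "key");
    return failures;
}
```

Calling `run_ice_box_tests()` from a `main` function and checking for a zero result exercises all three cases. In a Check-based suite, each case would instead be its own test with an assertion on the comparison.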

Generating the ice box test suite was not a very hard process. The parameters of the unit under test are the variables of the host at the insertion point of the transplant. The behaviour of the organ is asserted against that of the original host program. As can be seen below, the user does not have to know much about the donor program. We assume that the user knows the host program, and so has some knowledge of its variables. The WebServer donor is an exception: it requires selecting a random port on which to run the server. Because of this, to run the test cases we manually stored the port in a global variable, which the test cases then accessed.
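The global-variable workaround for the WebServer port might look roughly like the sketch below. All names here (`g_server_port`, `start_server_stub`, `test_port_is_shared`) are hypothetical, and no socket is actually opened: the stub only picks a pseudo-random port from the dynamic range and records it so that a test case can read it back.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical global holding the port chosen when the server starts,
 * so that test cases can find the server afterwards. */
int g_server_port = 0;

/* Hypothetical stand-in for starting the server: picks a pseudo-random
 * port in the dynamic range 49152..65535 and records it globally.
 * No network code is involved in this sketch. */
int start_server_stub(unsigned int seed)
{
    int port;

    srand(seed);
    port = 49152 + rand() % (65535 - 49152 + 1);
    g_server_port = port;
    return port;
}

/* A test case reads the port from the global instead of guessing it.
 * Returns 1 on success, 0 on failure. */
int test_port_is_shared(void)
{
    int started_on = start_server_stub(42u);

    return g_server_port == started_on
        && g_server_port >= 49152
        && g_server_port <= 65535;
}
```

A real test would use the stored port to connect to the running server and compare its responses with those of the donor.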

The time to generate the ice box test suites ranged from 15 to 120 minutes. The required time was usually closer to the lower bound, with high variance in the case of the WebServer transplant (caused by the difficulties of obtaining the port address and testing the web server). Although test generation can be very hard in general, even the upper bound of this range is low. The reason we were so efficient is that most of the source code knowledge needed relates to the host program. Also, even a small number of test cases proved effective in generating a good transplant. In practice, the quality of the resulting organ was assured by the system-level testing: acceptance and regression tests.

Acceptance Tests: For the acceptance tests we again used the Check framework. These tests target the grafted functionality. They assess the quality of the transplant itself, and a transplant must pass them to be considered successful. We wrote these tests following a process similar to the one used for the ice box tests. The difference is that we now test the entire program, rather than just the grafted organ. These tests were easier to write, given that the user knows the host program. Again, the original donor program served as the oracle.

Augmented Regression Tests: In some cases, the available regression tests (from the website of the host program) neither achieved significant coverage nor executed the organ. In those cases, we manually augmented the original regression test suites.

Note: To replicate our experiments, please use the experiment scripts provided here on the muScalpel page.

The test suites can be downloaded from the list below.

- Cflow Host

- Pidgin Host

- SoX Host

2014 © Earl T. Barr, Mark Harman, Yue Jia, Alexandru Marginean, and Justyna Petke